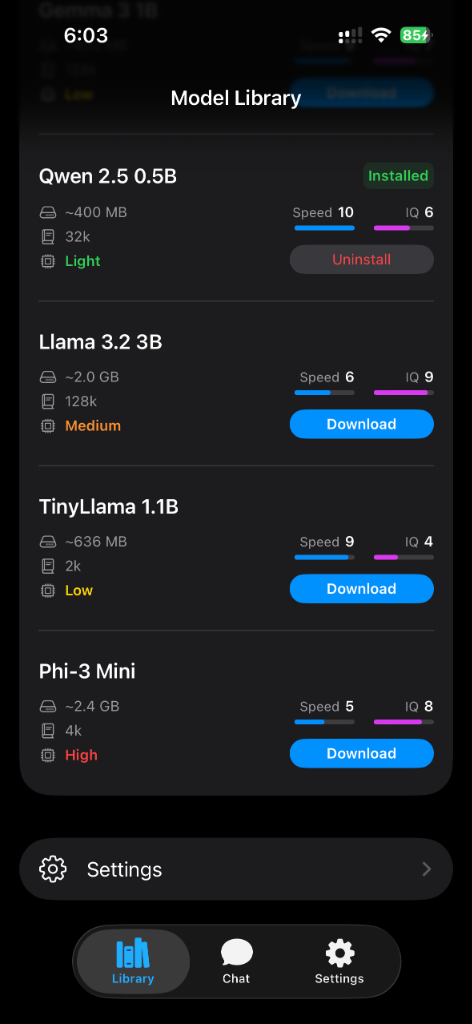

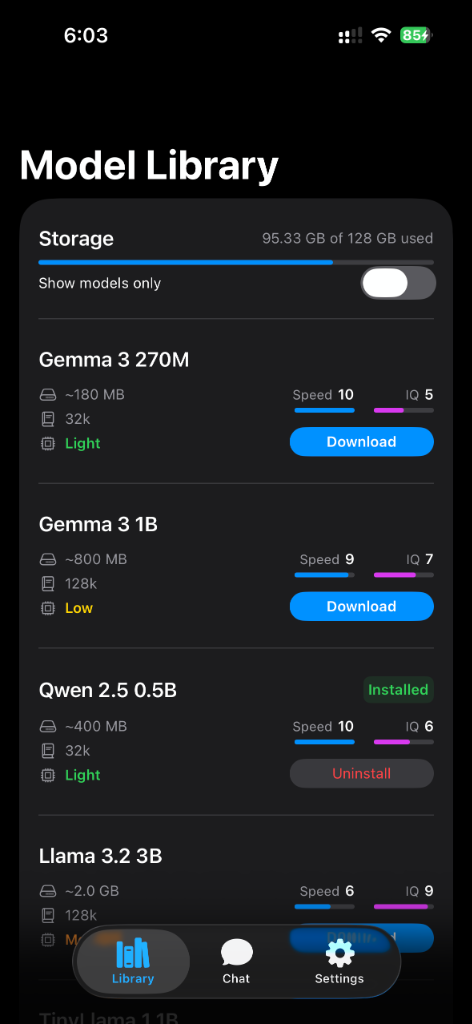

Model Library

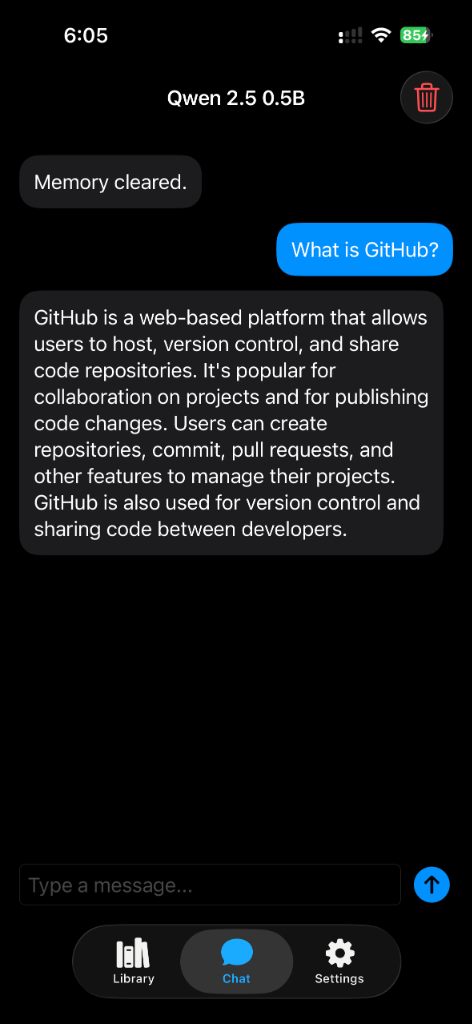

PocketLlama is a fully local AI chat application for iOS and iPadOS. It runs powerful LLMs such as Gemma 3, Llama 3.2, Qwen 2.5, and Phi-3 directly on your device, with no internet connection required.

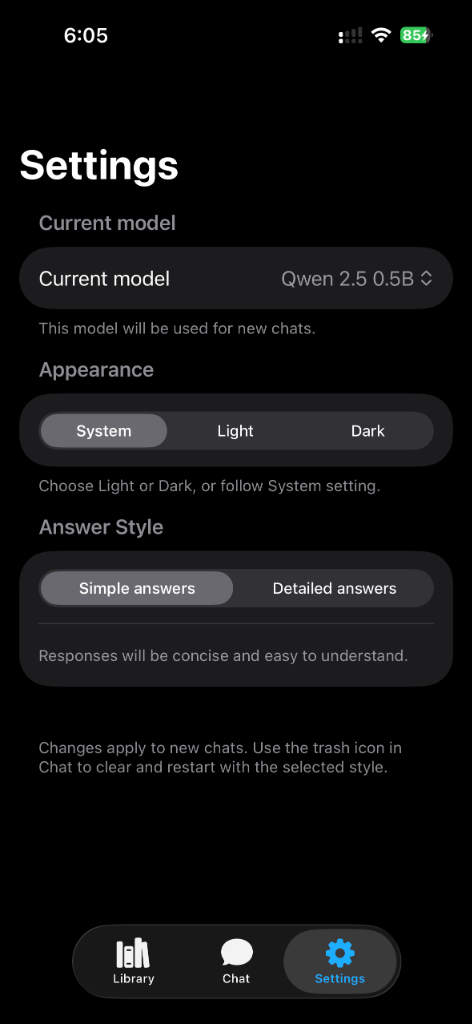

A clean, modern interface with smooth animations and intuitive controls.

- Model Library
- Storage & Models
- Chat Interface
- Settings

PocketLlama was built to provide an offline AI assistant that respects user privacy. Unlike cloud-based AI services, it does all processing locally on your device. This means: